Everyone wants to customize AI. Few understand their options.

You have company documents the model has never seen, a specific writing style it ignores, or domain knowledge it keeps getting wrong. You want AI that actually works for your situation rather than generic output that misses the mark.

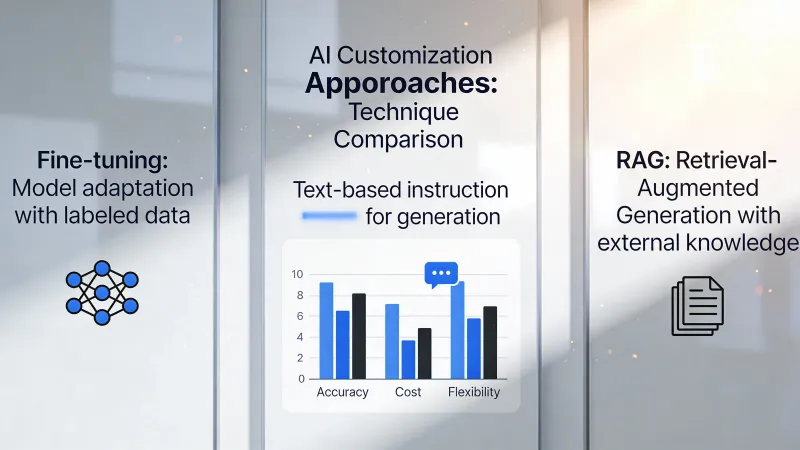

Three paths forward exist: prompting, RAG (retrieval-augmented generation), and fine-tuning. The industry treats these like a ladder you climb from simple to sophisticated. That framing causes expensive mistakes, because each approach solves a fundamentally different problem. Choosing based on perceived complexity rather than actual fit wastes both time and money.

The Uncomfortable Truth About Fine-Tuning

Let’s start with the option that sounds most impressive.

Fine-tuning changes the model itself. You feed it examples of input and desired output, and the training process adjusts the model’s internal weights to produce that kind of output more consistently, essentially teaching the model new behaviors through repeated exposure to your specific patterns.

It sounds powerful. It is powerful.

It’s also almost never what you need.

On Hacker News, intellectronica put it bluntly: “Fine-tuning is super important and powerful…in reality for most use cases it offers little advantage for a lot of effort.” That assessment keeps proving true across real-world deployments where teams spend months preparing training data only to discover that good prompts would have solved the problem in an afternoon.

The misconception runs deep. People assume fine-tuning teaches the model new facts the way studying teaches humans, but dvt on Hacker News challenged this directly: “It does not teach the model new knowledge.” Fine-tuning primarily changes behavior and style, not the knowledge the model can draw on. If your problem is that the model doesn’t know your company’s products or policies, fine-tuning is unlikely to fix it.

Where fine-tuning shines is consistency. If you need a model that outputs perfectly formatted JSON every single time, or maintains an exact tone across thousands of interactions, or handles a narrow task with machine-like reliability, fine-tuning delivers what prompts alone often cannot.

The cost makes this decision serious. Training runs range from hundreds of dollars for small models to tens of thousands for anything substantial. Data preparation often doubles the total investment because you need high-quality examples in the exact format the training pipeline expects. And when your requirements change? You train again.
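To make the data preparation step concrete, here is a minimal sketch of turning input and desired-output pairs into a training file. The `input` and `output` field names, the sample pairs, and the one-JSON-object-per-line layout are assumptions for illustration; a real training pipeline defines its own schema, so check its documentation before reusing this.

```python
import json

# Hypothetical examples: each pair shows an input and the output we want.
examples = [
    {"input": "Summarize: The meeting moved to Friday.",
     "output": "Meeting rescheduled to Friday."},
    {"input": "Summarize: The invoice was paid late.",
     "output": "Invoice paid late."},
]

def validate(pairs):
    """Reject pairs with missing or empty fields before they reach training."""
    for i, pair in enumerate(pairs):
        for field in ("input", "output"):
            value = pair.get(field)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"example {i} has a bad '{field}' field")
    return pairs

def to_jsonl(pairs):
    """Serialize pairs as one JSON object per line (assumed file format)."""
    return "\n".join(json.dumps(p, ensure_ascii=False) for p in validate(pairs))

training_file = to_jsonl(examples)
print(training_file)
```

Even in a toy like this, most of the code is validation and formatting rather than training, which is roughly where the preparation effort goes in practice.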

Why RAG Usually Wins

Here’s what most teams actually need: AI that knows about their stuff.

RAG works differently. Instead of changing the model, you build a retrieval system that finds relevant documents when a user asks something, then you include those documents in the prompt so the model has the information it needs to answer accurately.

The model stays generic. The knowledge stays external.

This distinction matters enormously. When your product documentation changes, you update the documents and RAG starts using the new information immediately. No retraining required. No data science team needed. Just update your files.

One practitioner on Hacker News described the trade-off this way: “RAG is focused more on capturing and using external resources on an ad hoc basis, where fine tuning looks to embed a specific ‘behaviour’ change in the model for a particular need.” That captures it precisely. RAG handles the what. Fine-tuning handles the how.

The transparency argument is compelling too. When RAG provides an answer, you can trace it back to specific source documents, which means you can audit responses, catch hallucinations, and show users exactly where information came from. Fine-tuned models offer no such paper trail.
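The retrieve-then-prompt flow can be sketched in a few lines. This is a toy under stated assumptions: the document store, file names, and word-overlap scoring are all hypothetical stand-ins, and a real system would typically use embeddings and a vector database instead of counting shared words.

```python
import re

# Hypothetical document store; in practice these would be your own files.
DOCUMENTS = {
    "returns.md": "Customers can return products within 30 days of delivery.",
    "shipping.md": "Standard shipping takes 5 business days.",
    "warranty.md": "All devices include a two year warranty.",
}

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(question_words & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents, top_k=1):
    """Put the retrieved text, tagged with its source name, into the prompt."""
    hits = retrieve(question, documents, top_k)
    context = "\n".join(f"[{name}] {text}" for name, text in hits)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

question = "How many days do customers have to return products?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```

Editing the text under `returns.md` changes the next prompt immediately, with no retraining, and the `[returns.md]` tag is what lets you trace an answer back to its source.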

Cost comparisons favor RAG heavily. Setting up a vector database and embedding pipeline runs $200 to $2,000 monthly for typical implementations. Fine-tuning a production model properly can cost at least that much in training compute alone, before counting the data preparation and ongoing maintenance.

But RAG has limits. It requires relevant documents to exist. The retrieval step adds latency. Long documents strain context windows. And critically, RAG cannot change how the model reasons or writes.

Prompting Is Not the Beginner Option

The framing of prompting as step one on a ladder dismisses its actual power.

Prompt engineering means crafting instructions that guide model behavior without modifying the model or adding anything external to it. You work entirely within the conversation. No infrastructure. No training data. No vector databases.

This sounds simple. That’s the trap.

Sophisticated prompt engineering includes structured reasoning frameworks, few-shot examples that demonstrate exact patterns, chain-of-thought breakdowns for complex tasks, and careful constraint specification that shapes outputs without explicit rules. Teams that dismiss prompting as basic often haven’t explored what’s possible when you invest real effort into it.
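As one small illustration, here is a sketch of a few-shot prompt builder. The support-ticket task, the labels, and the `Input:`/`Output:` layout are assumptions chosen for the example, not a required format.

```python
# Hypothetical few-shot examples demonstrating the exact output pattern.
FEW_SHOT = [
    ("The app crashes when I upload a photo.", "category: bug"),
    ("Please add a dark mode.", "category: feature_request"),
]

def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble the instruction, worked examples, and new input into one prompt."""
    parts = [instruction, ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")
    # End on an open "Output:" so the model continues in the demonstrated pattern.
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)

prompt = build_few_shot_prompt(
    "Classify each support message. Reply with exactly one line "
    "in the form 'category: <label>'.",
    FEW_SHOT,
    "How do I reset my password?",
)
print(prompt)
```

The examples carry the format more reliably than a description of the format would, which is why few-shot prompting is often the first thing to try for consistency problems.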

adamgordonbell shared a telling experience: “When I first wanted to tackle a hard problem I thought to reach for fine-tuning with lots of input and output pairs, but it wasn’t needed.” That pattern repeats constantly. Engineers assume complexity requires complex solutions, then discover that good prompts solve the problem more elegantly.

The cost structure makes prompting worth serious investment. Hours of prompt iteration cost little beyond your time and existing subscription or API fees. If you can solve a problem with prompts alone, you avoid infrastructure entirely, which means faster iteration cycles, simpler maintenance, and lower risk when requirements change.

The limitation is real though. Prompts can only shape behavior within what the model already knows how to do. You cannot prompt a model into understanding concepts it never encountered during training, and you cannot prompt your way to accessing information the model doesn’t have.

The Decision That Actually Matters

Stop asking which is best. Ask what your actual problem is.

The model gives wrong facts about your company, products, or recent events. That’s a knowledge problem. RAG solves knowledge problems by giving the model the information it lacks at query time.

The model knows the right information but produces outputs in wrong formats, wrong styles, or with inconsistent quality across interactions. That’s a behavior problem. Fine-tuning solves behavior problems by adjusting how the model generates responses.

The model could do what you want but doesn’t because your instructions aren’t clear enough. That’s an instruction problem. Prompt engineering solves instruction problems by giving better guidance within the conversation.

Most teams conflate these categories. They have a knowledge problem but assume fine-tuning will teach new knowledge. They have an instruction problem but assume RAG will provide instructions. Matching the solution to the actual problem saves months of wasted effort.
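The three categories above can be written down as a tiny decision helper. This is a sketch, not a diagnostic tool: the two yes/no questions and the order in which they are checked are assumptions layered on top of the categories, and the function names are hypothetical.

```python
from enum import Enum

class Problem(Enum):
    KNOWLEDGE = "knowledge"
    INSTRUCTION = "instruction"
    BEHAVIOR = "behavior"

# Mapping from problem category to the approach that addresses it.
APPROACH = {
    Problem.KNOWLEDGE: "RAG",
    Problem.INSTRUCTION: "prompt engineering",
    Problem.BEHAVIOR: "fine-tuning",
}

def diagnose(model_has_facts, instructions_are_clear):
    """Classify a failure using two yes/no answers (assumed checking order)."""
    if not model_has_facts:
        return Problem.KNOWLEDGE
    if not instructions_are_clear:
        return Problem.INSTRUCTION
    # The facts are available and the instructions are clear,
    # yet the output is still wrong: treat it as a behavior problem.
    return Problem.BEHAVIOR

problem = diagnose(model_has_facts=False, instructions_are_clear=True)
print(problem.value, "->", APPROACH[problem])
```

Checking instructions before behavior is deliberate: it rules out the cheapest fix before pointing at the most expensive one.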

The Dangerous Middle Ground

Sometimes the problem spans categories. You need company knowledge AND consistent formatting AND specific reasoning patterns.

This is where combination architectures emerge. RAG supplies current information. Fine-tuning ensures consistent output structure. Prompts provide query-specific guidance. All three working together.

phillipcarter on Hacker News cautioned against false dichotomies: “Fine-tuning doesn’t eliminate the need for RAG, and RAG doesn’t obviate the need for fine-tuning either.”

But combinations multiply complexity. Three systems to maintain. Three potential failure points. Three sets of costs. Build combinations only when single approaches demonstrably fail, not as a hedge against hypothetical problems.

The most sophisticated AI teams treat this as routing logic. Simple queries get prompts alone. Knowledge-intensive queries activate RAG. High-value, format-critical operations hit fine-tuned models. The system itself decides which path each request takes.

That architecture requires significant engineering. Most teams don’t need it. Most teams need to pick one approach and execute it well.
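For a sense of what that routing logic looks like at its simplest, here is a sketch. The `Request` fields and path names are hypothetical, the flags are assumed to be set by some upstream classifier that is not shown, and the priority order is an assumption.

```python
from dataclasses import dataclass

@dataclass
class Request:
    text: str
    needs_company_knowledge: bool = False  # assumed to be set upstream
    format_critical: bool = False          # assumed to be set upstream

def route(request):
    """Pick one path per request; format-critical work takes priority here."""
    if request.format_critical:
        return "fine_tuned_model"
    if request.needs_company_knowledge:
        return "rag"
    return "prompt_only"

requests = [
    Request("Rewrite this sentence more formally."),
    Request("What is our refund policy?", needs_company_knowledge=True),
    Request("Generate the invoice payload.", format_critical=True),
]
for r in requests:
    print(route(r), "<-", r.text)
```

The function itself is trivial. The hard engineering is in setting those flags correctly and in handling requests that need more than one path, which this sketch ignores.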

What the Market Learned the Hard Way

Early enterprise AI deployments defaulted to fine-tuning because it felt more substantial, more defensible in executive presentations, more obviously different from just using ChatGPT. Those deployments often stalled during data preparation phases that stretched into months.

solidasparagus summarized the practical reality: “collecting data and then fine-tuning models is orders of magnitude more complex than just throwing in RAG.”

The complexity isn’t just technical. Fine-tuning requires curated datasets that represent your use case accurately, which means identifying what good output looks like, collecting enough examples, formatting them correctly, and validating that the training set doesn’t contain errors that will propagate into the model. RAG starts from documents you probably already have.

This isn’t to say fine-tuning is never correct. Companies running millions of similar queries daily can fine-tune smaller models that run faster and cheaper than large general models. Specialized domains with unique terminology and reasoning patterns sometimes require behavior changes that prompts cannot achieve. Latency-sensitive applications benefit from smaller fine-tuned models over larger prompted ones.

But the threshold is high. You need volume to justify the investment. You need clear behavioral requirements that won’t change frequently. You need quality training data or the budget to create it. And you need patience for an iteration cycle measured in weeks rather than hours.
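The volume requirement can be checked with back-of-the-envelope arithmetic. The sketch below assumes the only benefit is a lower per-query cost from a smaller fine-tuned model; every figure in it is a placeholder rather than a measurement, so substitute your own numbers.

```python
import math

def break_even_queries(upfront_cost, large_cost_per_1k, small_cost_per_1k):
    """Queries needed before per-query savings repay the upfront cost.

    Costs are in the same currency; per-query costs are per 1,000 queries.
    The result is rounded up to the next 1,000 queries.
    Returns None when the fine-tuned model is not cheaper per query.
    """
    saving_per_1k = large_cost_per_1k - small_cost_per_1k
    if saving_per_1k <= 0:
        return None
    return math.ceil(upfront_cost / saving_per_1k) * 1000

# Placeholder figures, not measurements: replace with your own numbers.
queries = break_even_queries(
    upfront_cost=20_000,      # training plus data preparation
    large_cost_per_1k=10,     # large general model, per 1,000 queries
    small_cost_per_1k=2,      # smaller fine-tuned model, per 1,000 queries
)
print(queries)  # 2500000
```

If your expected volume sits well below the break-even figure, or your requirements are likely to change before you reach it, the investment is hard to justify on cost alone.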

The Underrated Option

For many business applications, the answer is simpler than any of these.

Use the model as-is with decent prompts. Accept some imperfection. Ship something.

The pursuit of perfect AI customization delays real value. Teams spend months on RAG architectures when a prompt template and manual review would serve their actual users better in the short term.

This doesn’t mean settling permanently. It means sequencing correctly: prove the concept works with simple approaches first, identify specific gaps through real usage, then invest in the customization that addresses those specific gaps.

Starting with fine-tuning before validating the use case is like optimizing code before knowing if it’s the right code. The effort might be wasted on a problem that doesn’t exist or shifts as you learn more about actual user needs.

Practical Starting Points

If your problem is knowledge, start with RAG. Get your documents into a vector store, build retrieval into your prompts, and see if the model produces correct answers when it has the right information available.

If your problem is consistency, start with detailed prompts including examples. Show the model exactly what you want with annotated samples. Most consistency problems yield to few-shot prompting before requiring fine-tuning.

If your problem is capability, meaning the model fundamentally cannot do the task, question whether AI is the right solution at all. Fine-tuning can extend capabilities at the margins but cannot transform a language model into something it isn’t designed to be.

If you’re not sure what your problem is, that’s the actual problem. Spend time characterizing failures before investing in solutions. Talk to users. Look at the bad outputs. Understand specifically what went wrong and why before deciding how to fix it.
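Characterizing failures can start as a simple tally. The sketch below assumes a person has already reviewed each bad output and assigned it a cause; the review log and the cause labels are hypothetical.

```python
from collections import Counter

# Hypothetical review log: each bad output is labeled by a human reviewer.
reviewed_failures = [
    {"id": 1, "cause": "missing_knowledge"},
    {"id": 2, "cause": "wrong_format"},
    {"id": 3, "cause": "missing_knowledge"},
    {"id": 4, "cause": "unclear_instruction"},
    {"id": 5, "cause": "missing_knowledge"},
]

def summarize_failures(failures):
    """Count failures per cause, most common first."""
    return Counter(f["cause"] for f in failures).most_common()

for cause, count in summarize_failures(reviewed_failures):
    print(f"{cause}: {count}")
```

A tally dominated by one cause points at one approach. A flat tally suggests the problem is not yet well enough understood to pick one.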

The teams that succeed with AI customization share one trait: they define the problem precisely before selecting the solution, then they validate their choice with minimal investment before scaling the approach across their organization.

The teams that struggle jump to solutions that sound sophisticated without understanding the problem well enough to know if sophistication is warranted.

Start simple. Add complexity only when simple demonstrably fails.

That’s not exciting advice. But it’s the advice that works.