I spent three hours last week writing the perfect prompt. Every edge case covered. Every formatting rule specified. Twelve paragraphs of detailed instructions for a simple email template.

The result was worse than a two-sentence prompt I’d thrown together the day before.

This happens more often than people admit. We assume more detail means better output, that longer prompts demonstrate more expertise, that thoroughness always wins. Sometimes it does. But sometimes all that careful crafting just confuses the model or bloats your API bill for no gain.

The real question isn’t which approach is “right.” It’s knowing when each one actually helps.

The Length Paradox

Here’s what research keeps finding: prompt length and output quality have a complicated relationship.

One Hacker News discussion on prompt engineering captured this tension well. Researchers trying to understand the connection between length and performance discovered something counterintuitive: “Prompt length would play a big factor in the performance of this approach. In practice, though, we discovered that it’s actually not as big a factor as we predicted.”

A 410-token prompt and a 57-token prompt both performed reasonably well in their tests. An 88-token prompt underperformed. Length alone explained almost nothing.

What actually mattered? Precision. The same researchers concluded that “the relationship between performance of the estimation and the prompt structure is less about length, and more about ‘ambiguity.’” Open-ended phrasing hurt results regardless of word count.

This matches what practitioners keep discovering. According to analysis by Ruben Hassid, “Prompts exceeding 500 words generally show diminishing returns in terms of output quality.” And it gets worse the longer you go: “For every 100 words added beyond the 500-word threshold, the model’s comprehension can drop by 12%.”

Those numbers vary by model and task. But the principle holds.

What Long Prompts Actually Look Like

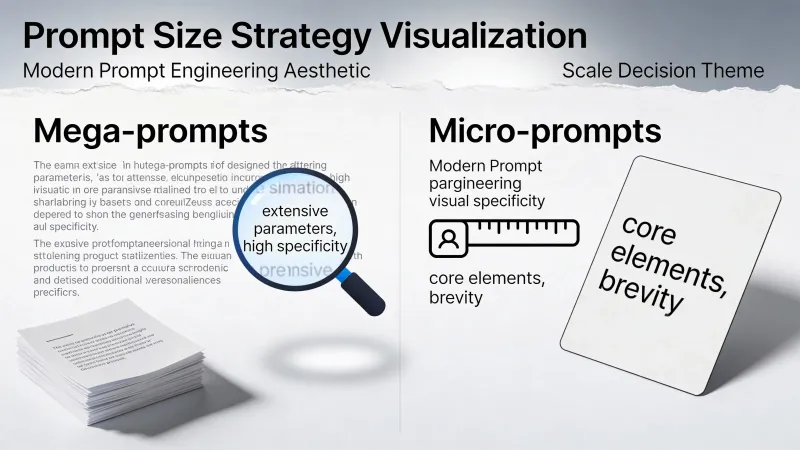

Andrew Ng and his team at DeepLearning.AI call detailed prompts “mega-prompts.” According to their research on prompt sophistication, these are instructions that run “1 to 2 pages long” with explicit guidance covering every aspect of the task.

Ng argues most teams aren’t pushing hard enough: “I still see teams not going far enough in terms of writing detailed instructions.”

A well-crafted mega-prompt might include:

- Specific role and expertise definition

- Background context about the situation

- Multiple examples of desired output

- Explicit formatting rules

- Constraints and things to avoid

- Quality criteria for the response

Here’s what one actually looks like in practice:

You are a senior B2B copywriter specializing in enterprise software. Your target reader is a VP of Marketing at a mid-size company who has been burned by overpromising AI vendors before.

Write product copy for DataSync, a data integration platform.

Key differentiator: we're honest about limitations. Our tool handles 80% of integrations perfectly, and we're upfront about the 20% that need custom work.

Requirements:

- Headline under 12 words, benefit-focused

- Subhead that acknowledges the skepticism of our audience

- Three bullet points on capabilities

- One bullet point on known limitations (this builds trust)

- CTA focused on seeing it work, not buying

Tone: Confident but never hypey. No "revolutionize" or "transform."

That prompt leaves little to chance. The AI knows exactly what’s expected.

What Short Prompts Actually Look Like

On the other end sits the micro-prompt. Brief. Focused. One task at a time.

Write a headline for an honest data integration platform. Max 10 words.

Same general task. Fraction of the words. The AI fills in everything else with reasonable defaults.

One developer on Hacker News described their evolution toward brevity: “I’ve stopped writing well-formed requests/questions and now I just state things like: ‘sed to replace line in a text file?’”

Despite the terse input, the model still delivered useful responses. Not every task needs elaborate scaffolding.

The Hidden Cost Dimension

Here’s something people rarely discuss when debating prompt length: every token costs money.

At the time of writing, OpenAI’s API pricing lists GPT-4o at $2.50 per million input tokens and $10.00 per million output tokens. That might seem trivial for one request. But scale it up.

A 500-word prompt runs roughly 650 tokens. A 50-word prompt runs roughly 65 tokens. If you’re making 10,000 API calls per day, the difference between mega and micro adds up fast:

- 650-token prompts: 6.5 million tokens = $16.25/day in input costs

- 65-token prompts: 650,000 tokens = $1.63/day in input costs

That’s roughly $5,340 per year in savings just on input tokens. And that’s before accounting for the larger output that detailed prompts often trigger.
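The arithmetic above is easy to re-run with your own numbers. The sketch below uses only the figures quoted in this section (the GPT-4o input price, 650 versus 65 tokens, 10,000 calls per day); the function and constant names are made up for illustration, and the price should be checked against whatever your provider currently charges.

```python
# Rough input-cost comparison. Every number here is an assumption taken
# from the figures quoted in the text, not a live price lookup.
PRICE_PER_MILLION_INPUT_TOKENS = 2.50  # USD, GPT-4o input price quoted above
CALLS_PER_DAY = 10_000


def daily_input_cost(prompt_tokens: int) -> float:
    """Estimated daily input cost in USD for a prompt of this many tokens."""
    tokens_per_day = prompt_tokens * CALLS_PER_DAY
    return tokens_per_day / 1_000_000 * PRICE_PER_MILLION_INPUT_TOKENS


mega = daily_input_cost(650)   # the 500-word prompt
micro = daily_input_cost(65)   # the 50-word prompt
yearly_savings = (mega - micro) * 365

print(f"mega: ${mega:.2f}/day")
print(f"micro: ${micro:.3f}/day")
print(f"difference: ${yearly_savings:,.0f}/year")
```

Swapping in your own token counts and call volume shows quickly whether prompt length is a rounding error or a real line item for your workload.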

Latency compounds this. Longer prompts take longer to process. If your application needs snappy response times, that 500-word system prompt creates noticeable delay on every request.

For batch processing or single-use prompts, the cost matters less. For production systems handling thousands of requests, it matters enormously.

When Detail Actually Hurts

The assumption that “more is better” breaks down in specific, predictable ways.

First, there’s the lost-in-the-middle problem. Ruben Hassid’s analysis on prompt length calls it out directly: “The single greatest threat to output quality is prompt bloat.” When prompts get long, information in the middle tends to get overlooked. Critical requirements buried in paragraph eight might as well not exist.

Second, contradictions creep in. Write enough instructions and you’ll eventually say “be concise but thorough” or “be creative but follow this exact format.” The model can’t satisfy both. It picks one and ignores the other, or produces muddled output trying to split the difference.

Third, there’s cognitive overhead. One practitioner in a Hacker News thread on prompt playbooks noted their experience: “Sometimes I get the feeling that making super long and intricate prompts reduces the cognitive performance of the model.”

Their solution? “My usage has converged to making very simple and minimalistic prompts and doing minor adjustments after a few iterations.”

The model isn’t a human colleague who appreciates context. It’s a pattern matcher. Too many patterns to match means worse matching overall.

When Detail Actually Helps

None of this means mega-prompts are wrong. They solve real problems.

When the output goes directly to customers without review, you need control. A loose prompt that occasionally produces off-brand copy is fine for drafts. It’s disastrous for automated email campaigns.

When you’ll reuse the prompt hundreds of times, investment pays off. Spending two hours perfecting a prompt you’ll run 5,000 times is smart math. Spending two hours on a one-time request is not.

When multiple parts need coherence, one prompt beats several. A landing page needs headline, subhead, body, and CTA that all work together. Separate micro-prompts might each be good individually but clash when combined.

Another developer in the same Hacker News thread shared their approach: “Some of my best system prompts are >20 lines of text, and all of them are necessary.”

Their method for building these prompts was telling: “every time the model does something undesired, even minor I add an explicit rule in the system prompt to handle it.” The length came from real needs, not theoretical thoroughness.

That’s the key distinction. Long prompts that grow organically from actual failures work. Long prompts written to cover hypothetical edge cases often just add noise.
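One way to keep a long prompt honest is to record the failure that justified each rule. The sketch below is a hypothetical structure, not the quoted developer’s actual tooling: `SystemPrompt`, `Rule`, and `add_rule` are names invented here, and the example failure is made up.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    text: str              # instruction that goes into the system prompt
    observed_failure: str  # the real output problem that motivated it


@dataclass
class SystemPrompt:
    base: str
    rules: list[Rule] = field(default_factory=list)

    def add_rule(self, text: str, observed_failure: str) -> None:
        """Accept a rule only if it points at a concrete, observed failure."""
        if not observed_failure.strip():
            raise ValueError("no observed failure: this rule is hypothetical")
        self.rules.append(Rule(text, observed_failure))

    def render(self) -> str:
        """Return the prompt text: the base followed by one line per rule."""
        lines = [self.base] + [f"- {rule.text}" for rule in self.rules]
        return "\n".join(lines)


prompt = SystemPrompt("You write short product emails.")
prompt.add_rule(
    "Never use the word 'revolutionize'.",
    observed_failure="A draft opened with 'Revolutionize your workflow'.",
)
print(prompt.render())
```

The failure notes never reach the model. They exist so that, months later, you can tell which rules earned their place and which can be deleted.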

The Chain Alternative

There’s a middle path that avoids the mega-prompt trap while keeping the control you need.

Paul Shirer argues in his analysis of prompt strategies that chain prompts beat mega prompts for most tasks. His reasoning: “You aren’t stuck with the result of a mega prompt that might have gone astray due to an overlooked detail.”

With chains, each step is a short, focused instruction:

- “Generate five headline concepts for this product.”

- “Take concept #3 and write three variations.”

- “Now write supporting copy for the best variation.”

- “Add a CTA that matches the headline tone.”

Each output informs the next. If step two goes wrong, you fix it before step three, not after the entire mega-prompt has run. As Shirer puts it: chain prompts work like “inching closer with each shot, adjusting your aim according to where the last arrow landed.”

The tradeoff is speed. Chains require multiple round trips. For interactive use, that’s fine. For batch processing thousands of items, the overhead adds up.
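The four steps above can be sketched as a small pipeline. This is a minimal illustration, not Shirer’s implementation: `call_model` stands for whatever function sends a prompt to your model and returns text, and `fake_model` is a stand-in so the sketch runs without an API key.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt string, returns the reply text.
ModelFn = Callable[[str], str]


def run_chain(call_model: ModelFn, product: str) -> dict[str, str]:
    """Run the four-step chain, passing each output into the next prompt."""
    concepts = call_model(
        f"Generate five headline concepts for this product: {product}"
    )
    # In interactive use, this is where you would review and correct the
    # output before moving on to the next step.
    variations = call_model(
        f"Take concept #3 and write three variations.\n\nConcepts:\n{concepts}"
    )
    copy = call_model(
        f"Now write supporting copy for the best variation.\n\nVariations:\n{variations}"
    )
    cta = call_model(
        f"Add a CTA that matches the headline tone.\n\nCopy:\n{copy}"
    )
    return {"concepts": concepts, "variations": variations, "copy": copy, "cta": cta}


def fake_model(prompt: str) -> str:
    """Offline stand-in for a real API call; echoes the first prompt line."""
    return f"[response to: {prompt.splitlines()[0]}]"


result = run_chain(fake_model, "DataSync")
print(result["cta"])
```

Each step is one round trip, which is the speed tradeoff in concrete form: four calls where a mega-prompt would make one.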

Reading the Signals

How do you know if your prompt is too long or too short?

Too long:

- Output ignores instructions you specifically included

- You’re repeating the same point in different words

- You catch yourself contradicting earlier sections

- Most sentences are “just in case” rather than essential

- The AI seems confused about what you actually want

Too short:

- Output is generic when you needed specifics

- Same follow-up corrections every time

- Wrong audience, tone, or format assumptions

- You keep adding “also include…” as afterthoughts

The pattern for most tasks: start short, add only what’s needed based on actual failures, stop when output is good enough.

A Framework That Works

Forget the mega-vs-micro debate. Ask these questions instead.

How important is this output? Critical outputs that ship directly justify more detailed prompts. Drafts and explorations don’t need them.

How reusable is this prompt? Twenty uses justify thirty minutes of crafting. One use justifies two minutes.

Can you iterate? If you can easily run again with adjustments, start short. If it’s a batch job that needs to work the first time, front-load the detail.

What’s the cost context? High-volume API usage means every token matters. Single requests mean the difference is negligible.

Do you know exactly what you want? Certainty favors detailed prompts. Exploration favors short ones with quick iteration.

The Relevance Test

Here’s the simplest possible rule: look at each sentence in your prompt and ask whether removing it would change the output.

If yes, keep it. Relevant context improves results.

If no, cut it. Irrelevant filler just dilutes attention.

A practitioner in a discussion about prompt engineering summarized this perfectly: “Irrelevant context is worse than no context, but it doesn’t mean a long prompt of relevant context is bad.”

That distinction matters more than any word count guideline. A 50-word prompt packed with fluff performs worse than a 300-word prompt where every word earns its place. A 300-word prompt full of padding performs worse than a focused 50-word prompt.

Measure by relevance, not length.
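The relevance test can be run mechanically as a sentence-by-sentence ablation. The sketch below is a simplified illustration under loud assumptions: `generate` is a hypothetical function that returns the model’s output for a prompt, the sentence splitter is naive, and exact string equality only makes sense with a deterministic stand-in like `toy_generate`. With a real model, output varies between runs, so you would compare by eye or with a similarity measure instead.

```python
import re
from typing import Callable


def split_sentences(prompt: str) -> list[str]:
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", prompt.strip()) if s]


def removable_sentences(prompt: str, generate: Callable[[str], str]) -> list[str]:
    """Return the sentences whose removal leaves the output unchanged."""
    sentences = split_sentences(prompt)
    baseline = generate(prompt)
    removable = []
    for i, sentence in enumerate(sentences):
        ablated = " ".join(sentences[:i] + sentences[i + 1:])
        if generate(ablated) == baseline:
            removable.append(sentence)
    return removable


def toy_generate(prompt: str) -> str:
    """Toy stand-in for a model: reacts only to audience and word limit."""
    audience = "hikers" if "outdoor" in prompt else "everyone"
    limit = "100" if "100-word" in prompt else "any"
    return f"audience={audience}, words={limit}"


draft = (
    "Write a 100-word product description. "
    "Target outdoor enthusiasts. "
    "Please do a really great job."
)
print(removable_sentences(draft, toy_generate))
```

Against the toy stand-in, only the final sentence comes back as removable, because it is the only one the output never depended on.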

What Actually Changes Behavior

The most effective prompts share a quality that has nothing to do with length. They reduce ambiguity.

“Write something good” is short but useless. “Write a 100-word product description for a stainless steel water bottle, targeting outdoor enthusiasts, emphasizing durability” is longer but every word serves a purpose.

The research bears this out. Going back to that Hacker News analysis, the core insight was that prompt engineering is about linguistic precision: “say what you mean in the most linguistically precise way possible.”

Not more words. Not fewer words. The right words.

Some tasks need many right words. Others need few. The skill isn’t picking a side in the mega-vs-micro debate. It’s learning to write exactly enough for each situation, and recognizing which situation you’re actually in.

Start with what the task needs. Add what your failures teach you. Stop when the output is good enough. The prompt length that results is the prompt length that was right.