Most prompts fail because they leave the AI guessing.

Not because they’re too short. Because they’re unclear about what you actually want. And when an AI guesses, it defaults to generic output.

As one Hacker News commenter put it: “prompt engineering is probably very close to good management and communication principles.” That framing is useful. You’re not coding. You’re communicating with something that has no ability to ask follow-up questions.

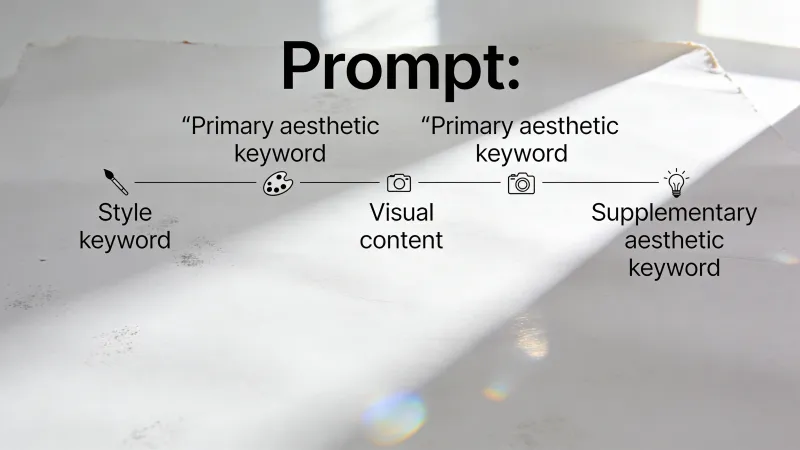

So what does a complete prompt look like? There’s a structure underneath the good ones.

The Building Blocks

Learn Prompting’s documentation identifies five components that appear in effective prompts; this article covers them as the directive, context, role, output format, and examples. The Prompt Engineering Guide uses slightly different terms but lands on similar categories: instruction, context, input data, and output indicator.

Most good prompts use three or four of these. Simple questions need one.
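If you assemble prompts in code, one option is to treat the components as optional fields and fill in only the ones a task needs. The sketch below is illustrative only: the `PromptParts` class and its field names are hypothetical, mirroring the five components in this article rather than any library's API.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class PromptParts:
    """Hypothetical container for the five prompt components."""

    directive: str                        # the task itself; always required
    context: Optional[str] = None         # background the model needs
    role: Optional[str] = None            # persona or perspective
    output_format: Optional[str] = None   # how the answer should look
    examples: Optional[str] = None        # demonstrations of the desired output

    def used(self) -> list[str]:
        """Names of the components that were actually filled in."""
        return [f.name for f in fields(self) if getattr(self, f.name)]


# A simple question needs only the directive.
simple = PromptParts(directive="What's the capital of France?")

# A richer task fills in several components, but not necessarily all five.
outline = PromptParts(
    directive="Create a presentation outline for a 6-month AI pilot update.",
    context="Audience: our executive team. They care about ROI and timeline.",
    output_format="A numbered list of slide titles.",
)

print(simple.used())   # ['directive']
print(outline.used())  # ['directive', 'context', 'output_format']
```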

The Directive (Instruction)

This is the task itself. What do you actually want?

“Write a follow-up email to a prospect who hasn’t responded in two weeks.”

That’s a directive. Clear and specific. Compare it to “Help me with this email.” The second version forces the AI to guess at your intention, your audience, your goal.

Vague directives produce vague output. This part isn’t mysterious.

Context (Background Information)

Everything the AI needs to understand your situation.

Without context, you get generic assumptions. With it, you get tailored responses. The difference can be substantial.

Say you need a presentation outline. “Create a presentation outline about AI” gives the model nothing to work with. Who’s the audience? What angle? How technical?

Now add context: “Presenting to our executive team next week. They approved our AI pilot last quarter and want a 6-month progress update. They care about ROI and timeline, not technical details.”

Suddenly the AI knows to emphasize business outcomes, avoid jargon, and structure for executives. Context answers the questions the model would ask if it could.

One Hacker News user suggested a structure that captures this well: “[Context] + [Supplemental Information] + [Intent / Use of result] + [Format you would like the result in]”.
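That four-part structure maps naturally onto a fill-in template. Here is a minimal sketch using Python's standard `string.Template`; the slot names (`context`, `supplemental`, `intent`, `fmt`) are our own labels for the commenter's four parts, and the values reuse the executive presentation scenario above.

```python
from string import Template

# Four slots following the commenter's structure:
# [Context] + [Supplemental Information] + [Intent / Use of result] + [Format]
PROMPT = Template(
    "$context\n\n"
    "$supplemental\n\n"
    "I will use the result to: $intent\n\n"
    "Format: $fmt"
)

prompt = PROMPT.substitute(
    context="I'm presenting to our executive team next week.",
    supplemental=(
        "They approved our AI pilot last quarter and care about ROI "
        "and timeline, not technical details."
    ),
    intent="give a 6-month progress update.",
    fmt="a slide-by-slide outline with one headline per slide.",
)
print(prompt)

# substitute() raises KeyError when a slot is left unfilled,
# which catches a prompt that silently skips one of the four parts.
try:
    PROMPT.substitute(context="Only context, nothing else.")
except KeyError as missing:
    print("missing slot:", missing)  # reports the first missing slot
```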

The Role (Persona)

This one is contested. Some swear by it. Others think it adds nothing.

The idea: assigning a role tells the AI what perspective and expertise to bring. “Act as a CFO explaining Q4 numbers to the board” shapes responses differently than asking the same question cold.

Research compiled by K2View describes role-based prompting as “explicitly assign[ing] the LLM a role, profession, or perspective to shape how it reasons and responds.” Its recommendation: choose realistic, task-relevant roles and keep role definitions concise.

But here’s the honest part: this doesn’t always matter. For straightforward tasks, roles often add nothing measurable. For tasks where expertise and perspective genuinely shape the output, they seem to help. The evidence is mixed enough that blanket advice either way seems premature.
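Given the mixed evidence, a practical option is to run the same request with and without a persona and compare the results. In chat-style APIs a persona is often passed as a separate system message. The sketch below only builds a message list in the `role`/`content` shape many such APIs use; it calls no API, the `with_persona` helper is hypothetical, and the exact structure your provider expects is an assumption to check against its documentation.

```python
from typing import Optional


def with_persona(persona: Optional[str], request: str) -> list[dict[str, str]]:
    """Build a chat-style message list, optionally led by a persona.

    Note: the "role" key here names the message author ("system" or "user").
    It is not the same thing as the persona you assign to the model.
    """
    messages: list[dict[str, str]] = []
    if persona:
        # Keep persona text short and relevant to the task.
        messages.append({"role": "system", "content": persona})
    messages.append({"role": "user", "content": request})
    return messages


request = "Explain the Q4 numbers to the board."
cold = with_persona(None, request)
as_cfo = with_persona("You are a CFO presenting to the board.", request)

# Send both variants to the same model and compare; keep the persona
# only if it makes a difference you can actually see.
print(cold)
print(as_cfo)
```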

Output Format

How should the response look? Bullets, numbered list, table, paragraph, JSON?

IBM’s prompt engineering guide emphasizes structured inputs and outputs for reliability. When you specify format, you reduce ambiguity about what “done” looks like.

“Give me 10 blog topic ideas as a numbered list. For each, include the topic and a one-sentence description of the angle.”

That prevents the rambling paragraph when you wanted a list. Format specifications eliminate a lot of back-and-forth.

Worth knowing: format instructions don’t always stick. One commenter on Hacker News reported a creative solution: “YOUR RESPONSE MUST BE FEWER THAN 100 CHARACTERS OR YOU WILL DIE. Yes, threats work. Yes, all-caps works.” Absurd, but apparently effective for enforcing constraints.

The same thread included another approach: “The examples are also too polite and conversational: you can give more strict commands and in my experience it works better.” There seems to be something to being direct rather than diplomatic.
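Whatever wording you use, you can also check whether a format instruction stuck before accepting a response. A minimal sketch for the numbered-list request above, assuming each item sits on a single line that starts with `1.` or `1)`; the function name and that one-line-per-item convention are our own assumptions, not part of the original prompt.

```python
import re

# One list item per line: a number, "." or ")", whitespace, then text.
ITEM = re.compile(r"^\s*(\d+)[.)]\s+(\S.*)$")


def check_numbered_list(response: str, expected_items: int = 10) -> list[str]:
    """Return a list of problems; an empty list means the format looks right."""
    problems: list[str] = []
    numbers: list[int] = []
    for line in response.splitlines():
        if not line.strip():
            continue  # blank lines between items are fine
        match = ITEM.match(line)
        if match:
            numbers.append(int(match.group(1)))
        else:
            problems.append(f"non-list line: {line.strip()[:40]!r}")
    if len(numbers) != expected_items:
        problems.append(f"expected {expected_items} items, got {len(numbers)}")
    if numbers != list(range(1, len(numbers) + 1)):
        problems.append("items are not numbered 1, 2, 3, ...")
    return problems


good = "\n".join(f"{i}. Topic {i}: a one-sentence angle." for i in range(1, 11))
bad = "Here are some ideas!\n1. Topic A: an angle.\n2. Topic B: an angle."
print(check_numbered_list(good))  # []
print(check_numbered_list(bad))   # chatty preamble plus too few items
```

If the check fails, re-asking with a more direct instruction is usually cheaper than rewriting the whole prompt.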

Examples (Demonstrations)

Sometimes showing beats telling.

This is particularly useful when format or style is hard to describe but easy to recognize. Instead of explaining your brand voice, show a sample. Instead of describing your email format, include one.

“Write a product description in our brand voice” is vague. Add an example:

“The Horizon Backpack isn’t just storage. It’s your mobile office, gym bag, and weekend escape kit rolled into one. Fits a 15-inch laptop, three days of clothes, and still has room for snacks. Because priorities.”

“Now write a similar description for the water bottle.”

The example communicates rhythm, humor, and sentence structure, more than paragraphs of explanation could.
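When you reuse the same demonstrations across many requests, the pattern is easy to assemble programmatically: task first, then the examples, then the new item. A minimal sketch; the `few_shot_prompt` helper and its "Example 1:" labels are hypothetical choices for illustration.

```python
def few_shot_prompt(task: str, examples: list[str], new_item: str) -> str:
    """Put the task and demonstrations first, then ask for a new item in the same style."""
    shown = "\n\n".join(
        f"Example {i}:\n{text}" for i, text in enumerate(examples, start=1)
    )
    return f"{task}\n\n{shown}\n\nNow write a similar description for {new_item}."


BACKPACK = (
    "The Horizon Backpack isn't just storage. It's your mobile office, gym bag, "
    "and weekend escape kit rolled into one. Fits a 15-inch laptop, three days of "
    "clothes, and still has room for snacks. Because priorities."
)

prompt = few_shot_prompt(
    task="Write a product description in our brand voice.",
    examples=[BACKPACK],
    new_item="the water bottle",
)
print(prompt)
```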

According to one Hacker News commenter, there are really only three core prompt engineering techniques: “In Context Learning” (providing examples), “Chain of Thought” (telling it to think step by step), and “Structured Output” (specifying a format like JSON). Most other strategies reduce to communicating requirements clearly.
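Of those three, structured output is the easiest to verify automatically. A minimal sketch using Python's standard `json` module, assuming you asked for a JSON array of objects with `topic` and `angle` keys; those key names and the `parse_topics` helper are our own choices for illustration.

```python
import json

REQUIRED_KEYS = {"topic", "angle"}  # hypothetical schema requested in the prompt


def parse_topics(raw: str) -> list[dict]:
    """Parse a reply that was asked to be a JSON array of topic objects.

    Raises ValueError if the reply is not valid JSON or an item lacks a key.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        raise ValueError(f"reply is not valid JSON: {err}") from err
    if not isinstance(data, list):
        raise ValueError("expected a JSON array")
    for i, item in enumerate(data):
        if not isinstance(item, dict) or not REQUIRED_KEYS.issubset(item):
            raise ValueError(f"item {i} is missing one of {sorted(REQUIRED_KEYS)}")
    return data


reply = '[{"topic": "Prompt anatomy", "angle": "Why most prompts leave the AI guessing."}]'
topics = parse_topics(reply)
print(topics[0]["topic"])
```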

Does Order Matter?

Maybe. Language models process text sequentially, predicting what comes next based on what came before. In practice, material near the end of a prompt often seems to carry more weight.

Learn Prompting recommends placing the directive toward the end, after context and examples, so the AI acts on the instruction instead of simply continuing the contextual information.

A logical sequence:

- Examples (if using them) - sets the pattern

- Context - provides background

- Role - establishes perspective

- Directive - the core task

- Format - how you want the output

That said, this isn’t rigid. Many prompts work fine in different orders. The principle: make sure your directive is clear and prominent, not buried.
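When prompts are assembled in code, one way to apply that sequence is to keep the order in a single place so the directive reliably lands near the end. A minimal sketch; the component names and the `assemble` helper are our own, and the order is the suggested one above, not a rule.

```python
# Suggested order from the list above; adjust freely, since it is not a rule.
ORDER: tuple[str, ...] = ("examples", "context", "role", "directive", "format")


def assemble(parts: dict[str, str], order: tuple[str, ...] = ORDER) -> str:
    """Join the components that are present, in the given order, skipping empty ones."""
    unknown = set(parts) - set(order)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    return "\n\n".join(parts[name] for name in order if parts.get(name))


prompt = assemble({
    "directive": "Write a 6-month progress update outline.",
    "context": "Audience: executives who approved our AI pilot last quarter.",
    "format": "Numbered list, at most 8 items.",
})
print(prompt)  # context first, then the directive, then the format
```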

What You Can Skip

Not every prompt needs all five parts.

Skip examples when the task is straightforward or you don’t care about specific style. Skip role when the task doesn’t require specialized perspective. Skip extensive context when the task is self-contained.

Simple questions need simple prompts. “What’s the capital of France?” doesn’t need any of this machinery. The complexity of your prompt should match the complexity of your task.

There’s also a case for starting minimal. As one Hacker News commenter observed: “sometimes the less specific you are, the better the result…if you specify too much they tend to hyperfixate on things.” It’s not always clear where the line is.

The Cost Dimension

More isn’t always better. Every word adds tokens, and tokens cost money.

Analysis by Aakash Gupta found significant cost differences between verbose and structured approaches. In one comparison, a simpler prompt structure cost about $706 per day for 100,000 API calls, while a more detailed approach cost $3,000 per day. That’s a 76% cost reduction for what may be equivalent quality.

The same analysis notes that shorter, structured prompts also produce less variable outputs and lower latency. Longer prompts don’t automatically mean better output.
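The cost figures are easy to sanity-check. Dividing each daily total by 100,000 calls gives roughly $0.007 versus $0.03 per call, and the reduction works out to about 76.5%, in line with the quoted figure. The daily totals come from the analysis cited above; the code only reproduces the arithmetic.

```python
CALLS_PER_DAY = 100_000
simple_daily = 706.0       # USD/day, simpler prompt structure (figure quoted above)
detailed_daily = 3_000.0   # USD/day, more detailed prompt (figure quoted above)

per_call_simple = simple_daily / CALLS_PER_DAY       # cost of one call
per_call_detailed = detailed_daily / CALLS_PER_DAY
daily_savings = detailed_daily - simple_daily
reduction = 1 - simple_daily / detailed_daily         # fraction of cost saved

print(f"per call: ${per_call_simple:.5f} vs ${per_call_detailed:.5f}")
print(f"daily savings: ${daily_savings:,.0f}")        # $2,294
print(f"reduction: {reduction:.1%}")                  # 76.5%
```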

Building Up a Prompt

Let’s work through an example.

Start with just the directive:

Write a sales email about our new project management software.

That’ll produce something generic but usable.

Add context:

We’re launching TaskFlow, a project management tool for marketing agencies. Target customer is agency owners managing 10-50 person teams. They’re frustrated with tools built for software companies.

Write a sales email about TaskFlow.

Now the AI understands product and audience.

Add role:

You’re a B2B SaaS marketer with experience selling to agencies.

The role points the model toward relevant expertise.

Add format:

Keep it under 200 words. Include a clear call to action to book a demo.

Now there’s structure around the output.

Add example (optional):

Here’s a sales email that performed well for us:

“Subject: Your project management tool wasn’t built for you

Running a marketing agency means juggling campaigns, clients, and creative chaos. Most PM tools were designed for shipping code, not shipping campaigns.

TaskFlow is different. Built by agency people for agency people. No developer jargon. No features you’ll never use. Just clear project tracking that matches how creative teams actually work.

[Demo link] - See it in action (15 minutes, no pitch).”

Write a similar email for our webinar registration campaign. Same tone, same length.

Each component adds specificity. The final version leaves less to chance.
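Each addition also lengthens the prompt, which ties back to the cost question. A rough sketch that stacks the walkthrough's components in the order they were introduced (with the example email abbreviated to its opening line) and reports a cumulative word count after each step. Whitespace-separated words are only a crude stand-in for tokens; real tokenizers count differently.

```python
# Text added at each step of the walkthrough (example email abbreviated).
STAGES: list[tuple[str, str]] = [
    ("directive", "Write a sales email about TaskFlow."),
    ("context", "We're launching TaskFlow, a project management tool for marketing "
                "agencies. Target customer is agency owners managing 10-50 person "
                "teams. They're frustrated with tools built for software companies."),
    ("role", "You're a B2B SaaS marketer with experience selling to agencies."),
    ("format", "Keep it under 200 words. Include a clear call to action to book a demo."),
    ("example", "Here's a sales email that performed well for us: Subject: Your "
                "project management tool wasn't built for you ..."),
]


def word_counts(stages: list[tuple[str, str]]) -> list[tuple[str, int]]:
    """Cumulative whitespace word count after each component is added."""
    total = 0
    counts: list[tuple[str, int]] = []
    for label, text in stages:
        total += len(text.split())
        counts.append((label, total))
    return counts


for label, total in word_counts(STAGES):
    print(f"+ {label:<9} {total:>3} words")
```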

When Output Goes Wrong

When the result isn’t what you wanted, ask which component failed.

Output too generic? Add context. Wrong tone? Adjust or add a role. Format is off? Be explicit. Style doesn’t match? Add an example. Response is confused? Your directive might be unclear.

This diagnostic approach beats starting over. Identify the weak part, fix that specific thing, try again.
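The checklist is essentially a lookup table from symptom to component, and writing it as one makes the "fix one thing at a time" idea concrete. A toy sketch; the symptom labels are our own shorthand for the questions above, and `what_to_fix` is a hypothetical helper.

```python
# Symptom -> component to revisit, following the checklist above.
DIAGNOSIS: dict[str, str] = {
    "too generic": "context: add background about your situation and audience",
    "wrong tone": "role: adjust or add a persona",
    "format is off": "format: state the structure explicitly",
    "style doesn't match": "examples: include a sample of the style you want",
    "confused response": "directive: restate the task more clearly",
}


def what_to_fix(symptoms: list[str]) -> list[str]:
    """Map each observed symptom to the component to change; unknown ones are flagged."""
    return [DIAGNOSIS.get(s, f"no mapping for {s!r}") for s in symptoms]


for fix in what_to_fix(["too generic", "format is off"]):
    print(fix)
```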

Related Techniques

Understanding prompt anatomy is foundation-level knowledge. It explains why some requests work and others don’t.

More advanced techniques build on this structure. Chain-of-thought prompting uses the directive component differently, asking the AI to reason step by step. Few-shot prompting centers on examples, showing the model how to handle new inputs through demonstrations.

Whether prompt structure will matter as much as models improve remains unclear. The shift toward “context engineering” suggests the game is changing. IBM notes that effective prompting extends beyond simple requests to understanding user intent, conversation history, and model behavior.

One Hacker News thread suggested that “sensitivity to the exact phrasing of the prompt is a deficiency in current approaches to LLMs and many are trying to fix that issue.” Maybe future models will figure out what you want from vague requests. Or maybe the underlying skill - being able to explain something clearly - will remain useful regardless of what you’re explaining it to.