You have a task for an AI. Do you just ask, or do you show it what you want first?

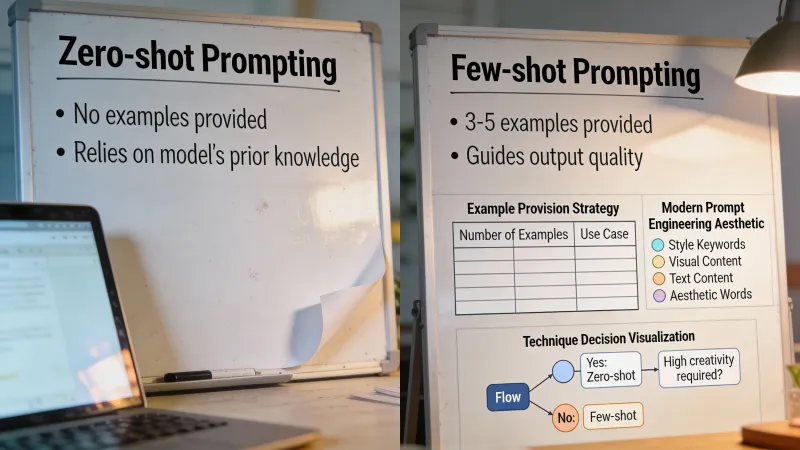

This is the zero-shot vs few-shot question. Zero-shot means asking directly without examples. Few-shot means giving one or more examples before your actual request. The names come from machine learning research, but the concept is simple: show vs tell.

Most people either never use examples or use them for everything. Both are wrong. The answer depends on what you’re asking for, which model you’re using, and whether format matters more than content.

The Examples-First Approach

Few-shot prompting works by pattern matching. You show the AI what a good output looks like, and it mirrors that pattern for your new input. This is especially powerful when you need a specific format, tone, or structure that’s hard to describe in words.

Say you need product descriptions for an e-commerce site. You could explain that you want short, punchy descriptions with key features in a specific order. Or you could just show one:

Ceramic Travel Mug Keeps coffee hot for 4 hours. Fits in standard cup holders. Dishwasher safe. Available in 6 colors.

Then ask for a description of a stainless steel water bottle. The AI now has a template. It matches the length, the sentence structure, the level of detail. No ambiguity about what you’re looking for.

One Hacker News commenter put it simply: “In general, showing an example of correct output (one shot prompting) can greatly improve output format consistency.”

That’s the core benefit. Consistency. When you need the AI to produce multiple outputs in the same format, examples beat instructions almost every time.

When Examples Change Everything

The gains from few-shot prompting can be dramatic. In one medical coding case study, adding example-label pairs to prompts improved accuracy from 0% to 90%. That’s not a typo. The same model went from completely wrong to nearly perfect just by seeing a few examples first.

But that’s a best-case scenario. Research compiled by PromptHub shows diminishing returns after about two to three examples. You see major gains after the first couple, then a plateau. Adding ten examples instead of three rarely helps and might actually hurt by cluttering the prompt.

A study from the University of London on automated bug repair found something counterintuitive: their MANIPLE framework achieved a 17% improvement in successful fixes by optimizing which examples to include, but adding more examples sometimes degraded performance. The prompt got noisier, not smarter.

The Surprising Case Against Examples

Here’s where it gets interesting. The rules are changing with newer reasoning models.

OpenAI’s o1 series and similar reasoning-focused models actually perform worse with examples in many cases. Research cited by PromptHub found that 5-shot prompting reduced o1-preview’s performance compared to a minimal prompt baseline. DeepSeek-R1’s documentation explicitly states that few-shot prompting “consistently degrades its performance.”

Why? These models are designed to reason through problems themselves. Giving them examples can constrain their thinking or send them down the wrong path. They work better when you describe what you want and let them figure out how to get there.

This matters because the field is moving toward reasoning models. If you’re using o1, o3-mini, or similar, try without examples first. Add them only if the output format needs correction.

The Model-by-Model Problem

There’s another wrinkle. The best examples for one model might not be the best examples for another.

Aickin, founder of Libretto, ran experiments testing whether the best-performing examples in one model would also be best in another. The finding was clear: “Most of the time, the answer was no, even between different versions of the same model.”

The practical implication is rough. You probably need to optimize examples on a model-by-model basis, and redo that work whenever a new model version releases. Those three perfect examples you crafted for GPT-4 might not transfer to GPT-4o or Claude 3.5.

For most people, this means keeping examples simple and not over-optimizing. The more specific your examples, the more likely they are to break when you switch models or the model updates.

Show vs Tell: When Each Works

Forget rigid rules. Think about what you’re actually trying to accomplish.

Examples work best when:

Format is everything. If you need JSON, markdown tables, or a specific template filled out, one example often beats paragraphs of instruction. The AI sees the structure and replicates it.

Style is hard to describe. “Write like our brand voice” is vague. Showing three sentences in your brand voice is concrete. The pattern is easier to match than the description.

You’re doing repetitive tasks. Need twenty product descriptions? Give two examples, get eighteen more in the same format. The consistency compounds.

The model is a standard LLM like GPT-4 or Claude. These models are trained to follow patterns. They respond well to show-don’t-tell approaches.

Skip examples when:

The task requires reasoning. Math problems, logic puzzles, code debugging, strategic analysis. For these, explain what you want and let the model think. Adding examples can constrain its approach or introduce errors from your example’s specific solution.

You’re using a reasoning model. o1, o3-mini, DeepSeek-R1. These models internally generate their own chain of thought. Examples can interfere with that process.

The task is straightforward. “Summarize this article in three sentences” doesn’t need an example. The instruction is clear enough. Adding examples just burns tokens without improving output.

You need creativity, not consistency. If you want the AI to surprise you, examples constrain the space of possible outputs. You’re showing it what’s allowed instead of what’s possible.

The Real-World Test

Theory is nice. Practice is better.

Run a simple experiment before committing to an approach. Take your task, run it three times zero-shot, three times with two examples. Compare the outputs. Did the examples improve quality? Did they improve consistency? Did they change anything at all?

Often the answer is: “The examples helped with format but not with content quality.” That’s useful information. It tells you when to invest in examples and when to invest in better instructions instead.

Some practitioners find that the sweet spot is one example for format plus detailed instructions for everything else. You get the structural consistency from the example while letting the instructions guide the substance.

The Cost Calculation

Examples aren’t free. Every example you add costs tokens on every API call. With Claude Haiku or GPT-4o-mini, the cost is negligible. With GPT-4 or Claude Opus, it adds up.

The math changes based on volume. If you’re running a prompt once, add as many examples as you want. If you’re running it thousands of times per day, every token matters.

Minimaxir noted on Hacker News that the economics favor few-shot prompting more than ever: “You will often get better results with few-shot prompting (with good examples) on a modern LLM than with a finetuned LLM.” Input tokens have gotten cheap, especially with models like Claude Haiku. The cost of adding examples has dropped dramatically.

But the comparison isn’t just about token cost. Fine-tuning a model costs 4-6x more than standard API usage, according to OpenAI’s pricing. If you’re choosing between fine-tuning and running lots of examples, the examples often win on cost even with the extra tokens.

The Dangerous Middle Ground

The worst approach is adding examples without thinking about whether they help.

Cargo-cult prompting. You saw somewhere that “always add examples” and now every prompt has three examples whether they’re relevant or not. The AI gets confused about what’s instruction and what’s context. The output gets worse, not better.

Or the opposite: you’ve internalized that prompts should be “clear and direct” so you never show examples even when format matters. You end up writing paragraph-long descriptions of table structures when one example would communicate the same thing in two lines.

The skill isn’t in memorizing rules. It’s in recognizing which situation you’re in.

Mixing Approaches

The examples vs instructions choice isn’t binary. You can explain what you want and then show it.

For document extraction, you might write: “Pull the customer name, email, rating, and main feedback point from these forms. Format as JSON.”

Then add one example showing the format. The instruction explains the task. The example nails the output structure. You get both clarity and consistency.

This hybrid approach works especially well when: the format is specific (use the example), but the reasoning behind decisions matters (use the instruction). Neither alone would be enough. Together they cover different aspects of what you need.

What Actually Matters

After all the research and experimentation, a few things are clear.

Examples help most with format, consistency, and style. If those are your priorities, use them. If content quality and reasoning are your priorities, examples might not help and could hurt.

Two to three examples is usually enough. More than that rarely adds value and might add noise. The PromptHub research shows diminishing returns kick in fast.

Test on your actual task with your actual model. The research averages hide huge variation. What works for medical coding might not work for marketing copy.

And watch what happens when models update. Your carefully optimized examples might need recalibration. The best few-shot prompt from last month might be average today.

If you take away one thing, let it be this: the difference between zero-shot and few-shot isn’t about which technique is “better.” It’s about whether pattern-matching or reasoning serves your task. Sometimes you want the AI to copy a structure. Sometimes you want it to think. Knowing which is which is the whole game.